No JavaScript projects were harmed in the making of this post. All the use cases are hypothetical. All the code and pain are real.

Devs should never trust input, for both reliability and security reasons. This matters even more in weakly typed languages like JavaScript, where the fallout from implicit casting can be quite unpredictable. BTW, it also applies to TypeScript, which might give you a false sense of type safety at runtime (that was never the intention of the TypeScript team, see the non-goals in their wiki).

And as for the output… Well, the behaviour is documented but who reads the docs?😉

In this brutal post we’ll check out cool examples and give out some slaps for sloppy practices (so, you know, devs would write better code). And yes, JavaScript’s strict mode won’t help here.

1. Check for NULL

Many JavaScript devs have learnt to be gentle with null values the hard way. That experience usually comes from writing if conditions like these:

null == 0; // false

null > 0; // false

// BUT

null >= 0; // true

null <= 0; // true

Here we go. A passed and unchecked null value can derail the logic in a simple comparison operator.

How come?

See detailed explanation in this StackOverflow post. In short:

the last cases force the null to be interpreted in a numeric context, so it’s treated like 0.
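To make the difference visible, the conversions can be spelled out by hand. This is only a sketch using built-ins; the variable names are made up for illustration.

```javascript
// Relational operators (<, >, <=, >=) convert null to a number first.
const asNumber = Number(null); // 0

// So these behave like [0 > 0, 0 >= 0, 0 <= 0].
const relational = [null > 0, null >= 0, null <= 0]; // [false, true, true]

// Loose equality follows a separate rule: null is equal only to null
// and undefined, so no numeric conversion happens here.
const equality = [null == 0, null == undefined, null == null]; // [false, true, true]

console.log(asNumber, relational, equality);
```

In other words, `>=` is not "`>` or `==`" once null is involved, which is why the first two comparisons can both be false while the third is true.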

The same happens when using the addition operator:

+null; // 0

null + null; // 0

null + 1; // 1

It doesn’t happen with undefined, though:

undefined + undefined; // NaN

undefined + 1; // NaN

Mitigation

Remember - In Input We Don’t Trust.

Always check input for null and undefined.
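As a rough sketch of such a check, the hypothetical helper below (`isNonNegative` is a made-up name, not from any library) refuses null and undefined before the comparison gets a chance to coerce them.

```javascript
// Hypothetical helper: true only for real numbers that are >= 0.
function isNonNegative(value) {
  // `value == null` is true for both null and undefined.
  if (value == null) {
    return false;
  }
  // Strings, arrays and objects are rejected by typeof;
  // NaN is rejected because NaN >= 0 is false.
  return typeof value === 'number' && value >= 0;
}

console.log(isNonNegative(null));      // false (a bare `null >= 0` would say true)
console.log(isNonNegative(undefined)); // false
console.log(isNonNegative(0));         // true
console.log(isNonNegative(-1));        // false
```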

There is no magic, see implementation of isNil() from lodash library. It’s just

function isNil(value) {
  return value == null
}

2. Check type for implicit string conversion

How easy it is to rely on automatic type conversion! It works. Well, most of the time… Until you use a received value in a basic arithmetic operation.

Pitfalls in adding / subtracting are so prevalent in JavaScript that they are even described in Wikipedia:

2 + []; // "2" (a string)

2 + [] + 1; // "21" (still a string)

2 + [] - 1; // 1 (a number)

-[]; // -0 (a number, equal to 0)

// For explicit strings

"bla " + 1; // "bla 1"

"bla " - 1; // NaN

// BUT for a non-empty array

2 + [0,1]; // "20,1" (a string)

2 + [0,1] + 1; // "20,11" (still a string)

2 + [0,1] - 1; // NaN

-[0,1]; // NaN

Sure, who would be silly enough to add an array to a number? Unless that array came from the outside instead of a number…
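Here is a hedged illustration of how that can happen in practice. The payloads and the `nextQuantity` function are hypothetical; the point is only that nothing fails loudly when the shape of the input changes.

```javascript
// Hypothetical function that assumes order.quantity is a number.
function nextQuantity(order) {
  return order.quantity + 1;
}

// Three hypothetical payloads, as they might arrive from an API.
const expected = JSON.parse('{"quantity": 2}');
const asArray = JSON.parse('{"quantity": [2]}');
const asString = JSON.parse('{"quantity": "2"}');

console.log(nextQuantity(expected)); // 3
console.log(nextQuantity(asArray));  // "21" (the array became the string "2")
console.log(nextQuantity(asString)); // "21"
```

No exception is thrown in any of the three calls, so the wrong value travels further into the system before anyone notices.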

And what about a standard JavaScript method returning an outright wrong result?

parseInt(0.00005); // 0

parseInt(0.000005); // 0

parseInt(0.0000005); // 5 WTF?

How come?

Clearly, JavaScript is quite liberal in handling arrays (especially, empty arrays)… and objects… and the rest. And not many know the rules:

- The binary + operator first converts both operands to primitives. If either of them is then a string, it concatenates them as strings; otherwise it converts both to numbers and adds them.
- The binary - operator always casts both operands to a number.
- Both unary operators (+, -) always cast the operand to a number.
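The rules above can be checked one by one. This is just a small sketch; the constant names are made up, and BigInt operands are left out on purpose.

```javascript
// Binary +: a string on either side (after conversion to a primitive)
// means concatenation. An empty array converts to the empty string.
const concatenated = [1 + '2', 2 + [], 'bla ' + 1]; // ["12", "2", "bla 1"]

// Binary +: no string involved, so both sides become numbers.
const added = [true + true, null + 1]; // [2, 1]

// Binary -: always numeric, whatever the operands are.
const subtracted = ['5' - 2, 2 + [] - 1]; // [3, 1]

// Unary + and -: always numeric.
const unary = [+'42', -'3', +[]]; // [42, -3, 0]

console.log(concatenated, added, subtracted, unary);
```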

Feel better? Now this example with objects is going to be just obvious 🙃:

({} + true); // "[object Object]true" (parenthesised, otherwise a leading {} is parsed as an empty block)

!{} + true; // 1

!!{} + true; // 2

!!{} - true; // 0

OK. Here is the same trick but slowly:

!{}; // false

!!{}; // true

true + true; // 1 + 1

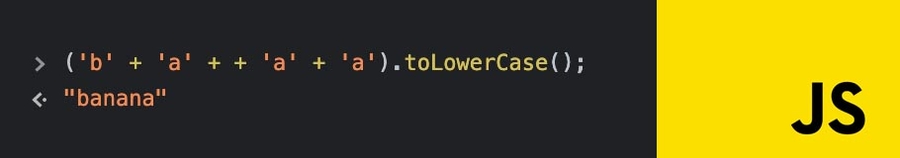

true - true; // 1 - 1

The same idea is behind a popular trick of writing ”banana” (link):

'b' + 'a' + + 'a' + 'a'; // baNaNa

Hope you feel better. But what about the parseInt(0.0000005) situation?

It’s caused by implicit conversion of the input parameter to a string that becomes more visible in this example:

String(0.000005); // '0.000005'

String(0.0000005); // '5e-7'

But the e-notation is not honoured by parseInt (as can be concluded from the docs): it reads the leading digits and ignores everything from the first non-digit character onwards. Therefore the following calls are handled in the same way:

parseInt(0.0000005); // 5

parseInt('5e-7'); // 5

parseInt('5bla'); // 5

Mitigation

Remember - In Input We Don’t Trust.

Checking type of input values before using them with binary/unary operators can be a lifesaver. E.g. use a humble isNumber() (from lodash) when expecting a number or isArray() for (hmm) arrays.
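A minimal sketch of such a guard, assuming plain `typeof` checks instead of lodash. `assertFiniteNumber` and `add` are hypothetical names. Note that lodash's isNumber() classifies NaN and Infinity as numbers, so the sketch also uses the built-in Number.isFinite() to exclude them.

```javascript
// Hypothetical guard: accept finite numbers only, reject everything else.
function assertFiniteNumber(value, name) {
  if (typeof value !== 'number' || !Number.isFinite(value)) {
    const kind = Array.isArray(value) ? 'array' : typeof value;
    throw new TypeError(`${name} must be a finite number, got ${kind}`);
  }
}

// Hypothetical function that refuses to coerce its operands.
function add(a, b) {
  assertFiniteNumber(a, 'a');
  assertFiniteNumber(b, 'b');
  return a + b;
}

console.log(add(2, 1)); // 3

try {
  add(2, []); // would silently produce "2" without the guard
} catch (err) {
  console.log(err.message); // "b must be a finite number, got array"
}
```

Failing early with a TypeError is a design choice here; returning a default value or an error object would work just as well, as long as the bad input never reaches the operator.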

In case of parseInt, the input is expected to be a string, so an isString() call for the input value is in order and then you get no surprises:

parseInt('0.0000005'); // 0

3. RTFM

JavaScript has a very low barrier to entry. It feels like one can fire away writing code without looking into the docs. It works at the start. Later, the suffering from weirdly opinionated implicit string conversion begins.

In some cases, the obscure logic can be seen as convenience:

String([,,,]); // ",,"

String(Array(3)); // ",,"

// Unless you join it...

Array(3).join("| "); // "| | "

Here the conversion to a string uses a comma separator automatically.

In others – as insanity:

[0, -1, -2].sort(); // [-1, -2, 0]

A very unexpected result: by default the elements are compared as strings, even though they stay numbers in the array (well documented, though).
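To see where that order comes from, the default comparison can be imitated with an explicit comparator. This is a simplified sketch (`byString` is a made-up name, and the real algorithm also treats undefined specially), but it gives the same result for this input.

```javascript
// Roughly what the default comparison does: compare the string forms.
const byString = (a, b) => {
  const x = String(a);
  const y = String(b);
  if (x < y) return -1;
  if (x > y) return 1;
  return 0;
};

const input = [0, -1, -2];

// Copies are sorted because sort() mutates the array in place.
const defaultOrder = [...input].sort();
const emulated = [...input].sort(byString);

console.log(defaultOrder);           // [-1, -2, 0]
console.log(emulated);               // [-1, -2, 0]
console.log(typeof defaultOrder[0]); // "number"
console.log([1, 10, 2].sort());      // [1, 10, 2]
```

The string "-1" sorts before "-2", and both sort before "0", because the comparison goes character by character rather than by numeric value.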

Sorting numbers properly takes an explicit compare function:

[0, -1, -2].sort((a, b) => a - b); // For ascending: -2, -1, 0

[0, -1, -2].sort((a, b) => b - a); // For descending: 0, -1, -2

Mitigation

JavaScript is old and well documented. Don’t get too self-confident and double check the Mozilla docs even for trivial stuff. The ECMAScript standard is good too but a bit harder to navigate.

Is everyone OK with it?

JavaScript has become very close to bare metal. It’s supported by any OS, many database servers (e.g. in Oracle, CosmosDB), and processors (ARM support). It runs everywhere, and one misstep in an edge case may lead to dire consequences…

So whatever JavaScript does is perceived simply as a necessary evil.

Some devs create educational resources like JsFuck.com. As the name suggests, it shows how to write ‘proper’ JavaScript code.

Others share such gems in a well-structured and comprehensive list of examples on GitHub.

And some just make fun. Like in this immortal lightning talk by Gary Bernhardt in 2012.

Where from here?

JavaScript evolves, and we’re on the 12th edition already: ECMAScript 2021. Not that they’ve addressed the criticism of how those conversions work, though… We’ve got asm.js and WebAssembly. Still, asm.js is 8 years old and far from being mainstream, while WebAssembly has been around for 1.5 years and is still a newbie here. We’ll see.

Interesting observation: in the JavaScript realm, most articles have a half-life of 6 months. Then they get eclipsed by new shiny things. The only outliers are posts about JavaScript pitfalls caused by dynamic typing and obscure casting. Those have stayed relevant for the whole 25-year history of JavaScript. So maybe (hope not) this post will live for another ¼ of a century.

Please share your thoughts in the comments below, on Twitter, LinkedIn or join the Reddit discussion.